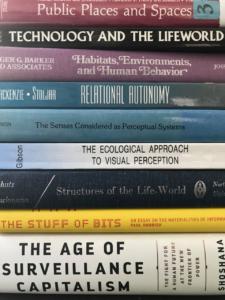

Classic phenomenologists and ecological psychologists have described and analyzed the core existential categories of persons, things and environments. These categories have been seen as essential not only to our perception and action choices, but also to ethics, values and the question of what it means to be human. Post-phenomenologists such as Don Ihde have sought to extend these analyses to better understand our technologically mediated lives. They also ask how things can in some sense be thought to have agency, or at least to participate in agentic structures. This has led to some reshuffling of how we draw the divisions between the core categories of things and persons. But what about “life-worlds” and environments? What happens when the background takes on agentic properties, or when it becomes a tool controlled by others from afar? Peter-Paul Verbeek has called this relation an “immersion” in technology. But where does such immersion leave our individual and social agency? This question is at the heart of a current project of mine, which seeks to analyze surveillance-driven algorithmic personalization and which I worked on during my months at the CFS this fall.

One aspect I am particularly interested in is the contrast between what we might call old-fashioned analog, “dumb” spaces and new “smart” spaces. A smart space can be either an entirely digital interface, such as a personalized online tool or platform, or an analog space transformed by connected, AI-powered IoT devices. The key is that we are dealing with spaces and interfaces that have sensors and effectors, together with some form of algorithmic decision-making that can transform the space and the options available to the agents acting within it.